A Mass-Produced Sociable Humanoid Robot: Pepper: The First Machine of Its Kind

Jun 11, 2019

By Amit Kumar Pandey and Rodolphe Gelin

As robotics technology evolves, we believe that personal social robots will be one of the next big expansions in the robotics sector. Based on the accelerated advances in this multidisciplinary domain and the growing number of use cases, we can posit that robots will play key roles in everyday life and will soon coexist with us, helping people lead smarter, safer, healthier, and happier lives.

The Pepper robot, developed by SoftBank Robotics, is one such robot, created with the goal of achieving this vision. This article presents insights derived from the design and applications of this machine and illustrates some of the use cases and research projects involving SoftBank Robotics, to better understand the companion relationship between humans and robots that Pepper makes possible. We conclude by outlining some of the grand challenges ahead for research and development (R&D).

A Business-to-Everything Robot

There is no doubt that robots are becoming commonplace: robotic machines of all shapes and sizes are now entering our day-to-day lives, assisting, working, and serving at shopping malls, hospitals, museums, railway stations, elder-care facilities, schools, and homes. With all these potential applications and the possibility of mass production and deployment of robots (as illustrated and discussed in the section “Application Potential and Acceptance”), these machines could become ubiquitous and eventually enrich our lives in manifold ways.

In this regard, robots capable of exhibiting sociability and achieving widespread societal acceptance are needed more than ever. Such sociable robots’ shape, size, look, behavior, and intelligence must all be designed and customized for working in a human-centered environment. This was the idea behind the development of the Pepper robot by SoftBank Robotics (https://www.ald.softbankrobotics.com/en). Although Pepper was initially designed for business-to-business (B2B) use in SoftBank stores, the robot became a platform of interest all around the world for various other applications, including in the business-to-consumer (B2C), business-to-academics (B2A), and business-to-developers (B2D) areas and in a variety of use cases. For example, the Pepper robot is currently deployed in thousands of homes and schools, and it has been selected as the robotic platform for the RoboCup@Home (http://www.robocupathome.org) Social Standard Platform League (SSPL) competitions.

A Global Overview of Pepper

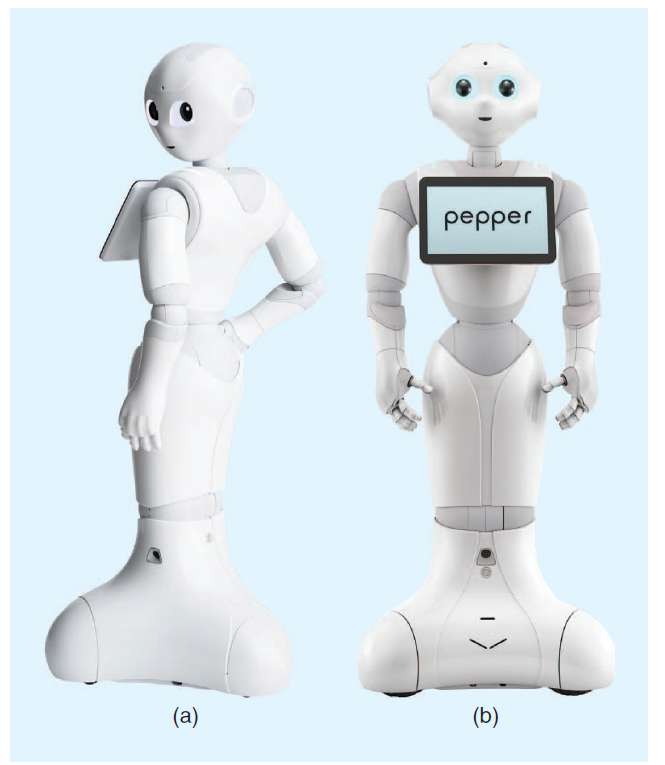

Figure 1. The SoftBank Robotics Pepper robot. (Image courtesy of SoftBank Robotics.)

Pepper (Figure 1) is an industrially produced humanoid robot launched in June 2014 that was first created for B2B needs and later adapted for B2C purposes. The machine is capable of exhibiting body language, perceiving and interacting with its surroundings, and moving around. It can also analyze people’s expressions and voice tones, using the latest advances and proprietary algorithms in voice and emotion recognition to spark interactions. The robot is equipped with features and high-level interfaces for multimodal communication with the humans around it.

Pepper is a 1.2-m-tall wheeled humanoid robot, with 17 joints for graceful and expressive body language, three omnidirectional wheels to move around smoothly, approximately 12 h of battery life for nonstop activities, and the ability to return to its recharging station when required. It is carefully shaped, without any sharp edges, for a more appealing and safer presence in the human environment. Soft parts in some joints (e.g., the elbow, shoulder, and hip) prevent the risk of pinching. The machine’s size and look aim to make it appropriate and acceptable in daily life for interacting with human beings. It is designed for a wide range of multimodal expressive gestures and behaviors and is equipped with a tablet (which also makes development and debugging convenient).

The Need and the Design Principles

Before becoming SoftBank Robotics, Aldebaran Robotics (founded by Bruno Maisonnier) was involved in the Romeo project, and later in a follow-up project, Romeo2 (http://projetromeo.com), with the goal of creating a daily-life-companion humanoid robot capable of providing physical and cognitive assistance to people needing support. Interesting outcomes of the projects also included data on users’ expectations about the robot’s shape, size, and behavior. These revealed that, for some day-to-day interaction contexts, people expect such robots to be taller than the 58 cm of the NAO robot (https://www.ald.softbankrobotics.com/en/robots/nao) but at the same time not taller than the height of an average person sitting in a chair. Such studies pointed to the need to investigate the next generation of personal and human-centered service robots.

At the same time, under the strong guidance of chief executive officer Masayoshi Son, SoftBank (http://www.softbank.jp/en/) sought to develop a robot to meet its B2B needs, help reduce the workload of its store staff, and attract more customers. In so doing, the company significantly advanced the vision of a new generation of humanoid robots and, hence, initiated a new chapter in robotics: the development of the Pepper robot (aptly nicknamed Juliet at the time, for what was a secret program following the Romeo project). Pepper was initially designed for B2B but with the hope that, at least in Japan, it could intrigue and attract consumers. Therefore, the anticipated needs of the Japanese B2C market in the coming years were also incorporated into the business plan.

At the time, various advanced humanoid robots (some part of a series) demonstrated the state of the art in this domain, e.g., Advanced Step in Innovative Mobility (ASIMO), Baxter, Compliant Humanoid Platform (COMAN), Exciting Nova on Network (Enon), Humanoid for Open Architecture Platform (HOAP), Humanoid Robotics Project (HRP), iCub, Justin, KHR, MAHRU, Nexi MDS, REEM, Robonaut, Saika, Twenty-One, and Wakamaru. However, most of these were in the experimental stage, designed for R&D purposes, or at the prototype level.

They either had not been developed further for sustainable commercialization through mass production or did not target the same design and application goals as the Pepper robot, i.e., a robust, general-purpose, socially interactive humanoid robot that could be used in daily life for B2B and B2C by running different downloadable apps. Consequently, except for some interactive robots [such as AIBO (http://www.sony-aibo.com), the companion robot pet series; NAO, the academic edition for university and laboratory research and education purposes; Paro (http://www.parorobots.com), the seal-shaped therapeutic robot; and Roomba (https://www.irobot.com), a robotic vacuum cleaner], there was almost no strong precedent for a contemporary socially interactive humanoid robot industry. In that context, the initial ideas about the target market helped to identify some key design needs of the new Pepper robot, which was intended to interact with people through different modalities on a day-to-day basis.

Natural, multimodal interaction with robots has long been seen as a necessity for robots’ successful deployment in human environments [1]. In fact, the emergence of human–robot interaction (HRI) as a research domain was shaped around the need to “understand and shape the interactions between one or more humans and one or more robots” [2] in anticipation of situations and applications in which robots would be all around us and collaborating with us. Studies have shown that a robot’s physical embodiment and tactile communication can make it a more engaging and effective interaction partner than an animated character [5], [20] and that a physical robot supports human learning gains better than voice or video [21].

Furthermore, researchers have found that a human-like appearance and interaction modalities are among the features that most study participants imagine companion robots should have, although people’s predilections vary with their individual personality differences [6]. In addition, as humanoid robots come to display body language and other abilities that embody human-like social signals, they become capable of being highly engaging [7]. It is no surprise, then, that SoftBank Robotics’s baby-sized humanoid robot NAO [3], [13], with its multimodal interaction capabilities and easy-to-program interface [4], rapidly became a widely accepted robotic platform for HRI research. Such studies, along with use-case ideas (for B2B in SoftBank stores and later for B2C, at least in Japan) and experience with the NAO robot, led to the design of the Pepper robot. Some of the principles behind its design are:

- A pleasant appearance

- Safety

- Affordability

- Interactivity

- Good autonomy

Appearance

Appearance characteristics include size, shape, look, and voice. For shape and size, user feedback on NAO, as described previously, and a family resemblance to NAO were incorporated into Pepper. For the look, too exact a human likeness was avoided, with the aim of not falling into the “uncanny valley” [8]. The design also has a Japanese influence, e.g., the manga-like big eyes and the hip joint that allows Pepper to bow upon meeting someone. The shape was intended to be gender neutral (with no explicitly defined gender characteristics) to avoid any stereotyping effect. Studies have already shown that a person tends to rate a robot that looks like the opposite sex as more credible, trustworthy, and engaging [9] and that, if robots exhibit a gender, there is a stereotype-based bias in the services the robot is expected to provide [10].

In addition, to further avoid stereotypes and unrealistic expectations, the robot’s voice was crafted to be childlike and androgynous. Our observations suggest that people generally refer to NAO as “him,” but they address Pepper as “him,” “her,” or “it” in almost equal measure. However, cultural factors might be involved, which require further investigation.

Safety

Safety is considered in various aspects of Pepper’s body design. For example, the robot has no sharp edges, parts of the cover are soft, and the center of mass is low, in the base, to keep the robot from falling over. The motors are just powerful enough to move the joints but not so strong as to hurt someone through an accidental blow. The robot is also equipped with bumpers. At the low level, software control monitors the behavior of each joint and detects whether an external force is applied to the arm. Various other software and hardware safety mechanisms, some in accordance with International Organization for Standardization (ISO) guidelines, are described in the section “Safety: A Must-Have Feature at Different Levels.”

Affordability

To ensure affordability, only the necessary components, sensors, and functionalities were added to fulfill specific use-case needs. For example, the hand was deliberately not designed for heavy manipulation but only to be good enough for expressive interaction. The “Hardware Design Outline” section provides information on the hardware and sensors.

Interactivity

Interaction is one of the key features of the Pepper robot. The need for natural and intuitive interaction is at the heart of its design, but the design also considers real-life situations in which one means of communication might not always be reliable or useful. Hence, Pepper offers multiple interaction interfaces: a touch screen, speech, a tactile head and hands, and light-emitting diodes (LEDs).

Several software components were developed to provide the necessary perception abilities and ensure smooth HRI, including the capacity to recognize and respond to human emotions, a library of expressive gestures, and microlevel behaviors for displaying liveliness. To achieve human-like and graceful expressivity through body language, the kinematic structure of the robot was carefully designed with 17 joints. The three omnidirectional wheels help achieve smooth movement and support small local displacements in more natural ways. The “Degrees of Freedom and Actuators” section provides information on the design kinematics, and the section “Multimodality of Natural Interaction at the Core” discusses the interaction multimodality.

Autonomy

Long-term autonomy is another important requirement, so that the robot can serve for an entire workday in SoftBank stores without recharging or intervention. Therefore, the whole system was designed to balance the software and hardware loads and achieve a battery life of up to 12 h. In addition, a docking station was specifically designed for autonomous charging. Furthermore, there are modules and apps for the robot to achieve behavioral autonomy in particular applications, reducing the need for human intervention. The sections “Support for Behavioral Autonomy” and “Basic Navigation and Manipulation Capabilities” provide pointers on the autonomy aspects.

Hardware Design Outline

The following specifications are based on Pepper version 1.8a.

Body and Computer

The robot’s hull is constructed of high-quality plastic, and many parts consist of soft plastics to reduce the risk of pinching during physical interaction and minimize damage if the machine should fall over. There are no external sharp edges. Tactile body parts composed of capacitive sensors indicate when the robot is touched.

Pepper has a height of 1,210 mm, a width of 480 mm, and a depth of 425 mm. Its weight is 28 kg. The robot is equipped with several LEDs, whose colors and intensity are software controlled, to signal and support communication. The machine has an Intel Atom E3845 quad-core processor with a clock speed of 1.91 GHz. It has 4 GB of double-data-rate type-three (DDR3) random-access memory and 32 GB of embedded multimedia card (eMMC) flash memory, of which 24 GB are available to users.

Degrees of Freedom and Actuators

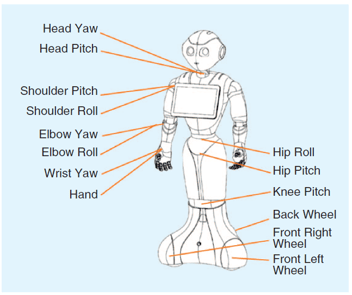

Figure 2. The Pepper robot’s joints.

As illustrated in Figure 2, the Pepper robot has 20 degrees of freedom (DoF) for motion in the whole body (17 joints) and omnidirectional navigation (three wheels). The DoFs include two in the head, two in each shoulder (left and right), two in each elbow (left and right), one in each wrist (left and right), one in each hand (five-fingered left and right hands), two in the hips, one in the knee, and three in the base. The omnidirectional wheels allow the robot to climb steps of up to 1.5 cm and slopes of up to 5°.
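As a quick check on the joint count above, the tally below sums the per-group degrees of freedom listed in the text. It is a minimal Python sketch; the group labels are descriptive names chosen for illustration, not NAOqi joint identifiers.

```python
# Degrees of freedom per joint group, as listed in the text (Pepper 1.8a).
# Keys are descriptive labels chosen for illustration, not NAOqi joint names.
BODY_DOF = {
    "head": 2,
    "shoulders": 2 * 2,  # two per shoulder, left and right
    "elbows": 2 * 2,     # two per elbow, left and right
    "wrists": 1 * 2,
    "hands": 1 * 2,      # one actuated DoF per five-fingered hand
    "hips": 2,
    "knee": 1,
}
BASE_DOF = 3  # planar x, y translation and rotation from the three omnidirectional wheels

body_joints = sum(BODY_DOF.values())
total_dof = body_joints + BASE_DOF
print(body_joints, total_dof)  # 17 20
```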

The actuators were designed by SoftBank Robotics based on brushed dc motors in the upper limbs and a brushless dc motor in the lower limb. The joint-position sensor is based on a magnetic rotary encoder, and there is a 12-b position sensor on each motor of the upper limbs. In almost all actuators (shoulder, elbow, neck, and leg), plastic bushings are used to ensure good guidance. They are lighter, smaller, and cheaper than ball bearings. Plastic bushings add a bit more friction, but it remains within the tolerance required for these joints. Ball bearings are used only for the wheel actuators.
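To give a sense of what a 12-b position sensor means in practice, the sketch below converts raw readings to angles. It assumes the 12-b value spans one full revolution of the shaft the sensor is mounted on; if that shaft sits on the motor side of a gearbox (gear ratios are not given here), the resulting joint resolution would be finer by the gear ratio. The function name is hypothetical.

```python
ENCODER_BITS = 12
COUNTS_PER_REV = 2 ** ENCODER_BITS  # 4096 distinct readings per revolution


def counts_to_degrees(count: int, bits: int = ENCODER_BITS) -> float:
    """Convert a raw absolute-encoder reading to an angle in [0, 360) degrees."""
    counts = 2 ** bits
    return (count % counts) * 360.0 / counts


# Smallest angle step the sensor can distinguish on its own shaft.
resolution_deg = 360.0 / COUNTS_PER_REV
print(f"{resolution_deg:.4f} deg per count")  # 0.0879 deg per count
```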

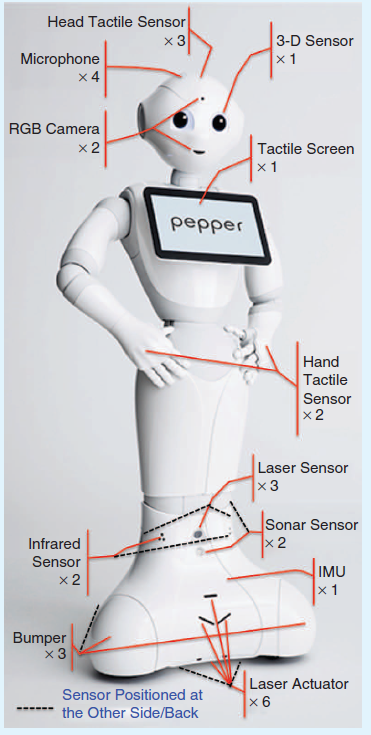

Sensors and Network

The Pepper robot has a range of sensors that allow it to perceive objects and humans in its surroundings and help the software components make sense of its environment. These are illustrated in Figure 3 and outlined as follows:

Six-axis inertial measurement unit (IMU) sensor: The machine is equipped with an IMU composed of a three-axis gyrometer with a range of about 500 °/s and a three-axis accelerometer with a range of about 2 g. The output data enable an estimation of the base speed and attitude (yaw, pitch, and roll). Inside the inertial board, an algorithm is implemented to compute the base angle from the accelerometer and gyrometer.

Microphones: Pepper has four microphones in the head to provide sound localization. These have a sensitivity of 250 mV/Pa (±3 dB at 1 kHz) and a frequency range of 100 Hz to 10 kHz (−10 dB relative to 1 kHz).

Cameras and three-dimensional (3-D) sensor: The robot has two red-green-blue (RGB) cameras at the forehead and mouth positions. The resolution is 2,560 × 1,920 at 1 frame/s or 640 × 480 at 30 frames/s. One 3-D sensor is located behind the eyes. It provides an image resolution of up to 320 × 240 at 20 frames/s.

Tactile sensors, bumper sensors, and tablet: There are three tactile sensors: one in the head and one on top of each of the hands. The robot also has three bumper sensors, one on each wheel position. In addition, it has a tablet attached to its chest.

Laser sensing modules: These are composed of six laser line actuators (laser line generators) and three sensors. Three actuators at the front of the robot evaluate the ground ahead of it, and the other three, on the robot’s lower base, sense the surroundings. The three sensors are located at the front and on the left and right sides.

Loudspeakers, sonar sensors, and infrared sensors: The Pepper robot is also equipped with two loudspeakers, placed laterally on the left and right sides of the head; two sonar sensors, one in front and one in back; and two infrared sensors at the base.

Network connectivity support: This includes Ethernet (1x RJ-45 10/100/1000 Base-T) and Wi-Fi (IEEE 802.11 a/b/g/n; security: 64/128-b wired equivalent privacy, Wi-Fi protected access (WPA)/WPA2). For communication among components, RS-485 is used between the motor/sensor board and the internal computer, and the communications device class-Ethernet control model (CDC-ECM) is used over a universal serial bus (USB) cable between the tablet and the CPU.

Figure 3. The Pepper robot’s sensors. (Image courtesy of SoftBank Robotics.)
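The text does not describe the algorithm on the inertial board that fuses the gyrometer and accelerometer. A common textbook choice for this kind of fusion is a complementary filter, sketched below as an illustration rather than as SoftBank Robotics's implementation. The blend factor `alpha`, the 10-ms time step, and the small-angle treatment of gyro rates are all assumptions. Gravity carries no yaw information, so yaw here is gyro-only and drifts.

```python
import math


def accel_tilt(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Pitch and roll (rad) implied by gravity alone; valid only when the base is not accelerating."""
    pitch = math.atan2(-ax, math.hypot(ay, az))
    roll = math.atan2(ay, az)
    return pitch, roll


class ComplementaryFilter:
    """Blend integrated gyro rates (smooth but drifting) with accelerometer tilt (noisy but drift-free)."""

    def __init__(self, alpha: float = 0.98) -> None:
        self.alpha = alpha  # weight on the gyro path (hypothetical tuning value)
        self.pitch = 0.0
        self.roll = 0.0
        self.yaw = 0.0  # gyro-only: gravity carries no yaw information, so this drifts

    def update(self, gyro, accel, dt: float):
        # Small-angle assumption: body rates are added directly to the angles.
        gx, gy, gz = gyro  # rad/s about x (roll), y (pitch), z (yaw)
        acc_pitch, acc_roll = accel_tilt(*accel)
        a = self.alpha
        self.roll = a * (self.roll + gx * dt) + (1 - a) * acc_roll
        self.pitch = a * (self.pitch + gy * dt) + (1 - a) * acc_pitch
        self.yaw += gz * dt
        return self.pitch, self.roll, self.yaw


# Usage: base held at a constant 0.1-rad roll, gyros reading zero, 10-ms steps.
g = 9.81
f = ComplementaryFilter()
tilt = 0.1
for _ in range(500):
    pitch, roll, yaw = f.update((0.0, 0.0, 0.0), (0.0, g * math.sin(tilt), g * math.cos(tilt)), dt=0.01)
print(round(roll, 3))  # 0.1
```

With `alpha` close to 1, short-term changes come mostly from the gyro, while the accelerometer slowly pulls the estimate back toward the true tilt and cancels gyro drift.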

Software Features

Software Development Kits and Documentation

NAOqi is the underlying operating system that runs on and controls Pepper. NAOqi also provides a programming framework for developing applications on the robot, addressing common robotics needs such as parallelism, resources, synchronization, and events. Several software development kits are provided to control Pepper and develop applications for it: Python, C++, Java, JavaScript, and the Robot Operating System (ROS) interface (http://wiki.ros.org/Aldebaran). Updated documentation is maintained online for developers (http://doc.aldebaran.com). There is also a dedicated developer community (https://developer.softbankrobotics.com) for support, with a question-and-answer forum.

Multimodality of Natural Interaction at the Core

As mentioned, the core requirement of the initial B2B scenario was interaction with humans, and the capacity to perceive people is one of the main capabilities needed to achieve this. Therefore, the Pepper robot is equipped at both the hardware and software application programming interface (API) levels to provide good functionality for perceiving humans. The multimodal perception components are primarily intended to discern people’s presence and avoid collisions with the environment during body movement. NAOqi’s People Perception module provides built-in APIs to help in the development of high-level reasoning and behavioral capabilities.

One of the unique capabilities of the Pepper robot is dialog-based interaction, which is crucial for delivering natural and more gratifying HRI. A dialog-based interaction system can be easily created using the NAOqi ALDialog module and the Qichat scripting language. These provide various functionalities to devise and shape natural interaction, such as defining concepts and topics. They also serve as one of the easiest means to provide input to and command the robot through natural language.
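Qichat scripts group interchangeable phrasings into concepts and map user inputs to robot replies through rules. The toy Python matcher below only illustrates that concept-and-rule idea; the concept names, rules, and whole-utterance matching are hypothetical simplifications and do not reproduce ALDialog's matching behavior or the Qichat syntax.

```python
import re
from typing import Optional

# Hypothetical concepts: each names a set of interchangeable phrasings.
CONCEPTS = {
    "greetings": {"hello", "hi", "good morning"},
    "farewells": {"bye", "goodbye", "see you"},
}

# Hypothetical rules: (concept the user must say, robot reply).
RULES = [
    ("greetings", "Hello! How can I help you?"),
    ("farewells", "Goodbye, see you soon!"),
]


def normalize(utterance: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    words = re.findall(r"[a-z']+", utterance.lower())
    return " ".join(words)


def respond(utterance: str) -> Optional[str]:
    """Return the reply of the first rule whose concept matches the whole utterance."""
    text = normalize(utterance)
    for concept, reply in RULES:
        if text in CONCEPTS[concept]:
            return reply
    return None  # no rule fired; a real dialog engine might fall back or stay silent


print(respond("Good morning!"))
```

Grouping synonyms into concepts keeps each rule short: adding a new greeting means editing one set rather than every rule that reacts to greetings.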

In addition, the robot is equipped with the Animated Speech and Expressive Listening modules to display human-like gestures while speaking or listening. These, combined with Pepper’s 17 articulations, allow the machine to move fluidly in ways that make it appear more naturally interactive, with the aim of achieving a high level of human–robot engagement.

As indicated earlier, in the “Hardware Design Outline” section, Pepper is equipped with various tactile areas, LEDs, and a tablet. These, combined with its dialog- and animation-based interaction capability, provide a unique capacity to interact with humans in a multimodal way using speech, expressive gestures, and a graphical user interface.

Support for Behavioral Autonomy

The Pepper robot has modules for various autonomous behaviors. It comes with the Autonomous Life module, whose basic awareness capabilities keep the robot visibly active and seemingly alive. The original idea was to show that the robot is different from any other object by having it do something simple and appear active. This is also a way for the robot to show that it is present and ready to help or interact, and it gives the impression that the robot has its own personality. For developers, it is a source of inspiration for creating interesting, engaging, and fun behaviors.

Moreover, the Autonomous Life framework lets creators further customize a particular type of advanced autonomy depending on the requirements and situation. It allows Activities and Behaviors to be launched autonomously when their specific Launch Trigger Conditions, evaluated from situation-assessment-based events, are satisfied.
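The idea of launching activities when their trigger conditions hold can be illustrated with a small priority-based selector. In this hypothetical sketch, each activity carries a Python predicate over a snapshot of assessed situation events, and the highest-priority activity whose predicate is satisfied wins. The activity names, event keys, priorities, and thresholds are all invented for illustration and are not part of the Autonomous Life API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical snapshot of assessed situation events (e.g., people nearby, battery level).
Situation = Dict[str, float]


@dataclass
class Activity:
    name: str
    priority: int  # higher wins when several triggers are satisfied at once
    trigger: Callable[[Situation], bool]  # stands in for a launch trigger condition


def select_activity(activities: List[Activity], situation: Situation) -> Optional[str]:
    """Pick the highest-priority activity whose trigger holds, or None if none do."""
    eligible = [a for a in activities if a.trigger(situation)]
    if not eligible:
        return None
    return max(eligible, key=lambda a: a.priority).name


ACTIVITIES = [
    Activity("greet_visitor", 1, lambda s: s.get("people_in_zone1", 0) > 0),
    Activity("go_to_charger", 10, lambda s: s.get("battery_level", 1.0) < 0.2),
]

print(select_activity(ACTIVITIES, {"people_in_zone1": 2, "battery_level": 0.8}))
```

Keeping conditions as data attached to each activity, rather than hard-coding them in a central loop, lets new activities be added without changing the selector.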

Basic Navigation and Manipulation Capabilities

On the software level, Pepper is equipped with modules to achieve basic navigation and local obstacle-avoidance behavior. In addition, it is possible to use ROS-based modules for navigation-oriented perception and planning.
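The three omnidirectional wheels are what give the base its three planar degrees of freedom: any combination of forward, lateral, and rotational velocity maps to a set of wheel speeds. The sketch below shows the standard inverse kinematics for a three-omniwheel base under an assumed geometry (wheels 120° apart; the base and wheel radii are placeholders, since the text does not give Pepper's dimensions). In practice, NAOqi applications typically command the base at a higher level, for example through ALMotion's `move` and `moveTo` methods, rather than computing wheel speeds directly.

```python
import math
from typing import List

# Hypothetical geometry: the text does not give Pepper's wheel layout or dimensions.
WHEEL_ANGLES = [math.radians(a) for a in (90.0, 210.0, 330.0)]  # wheel positions around the base
BASE_RADIUS = 0.2    # m, base center to each wheel contact point (assumed)
WHEEL_RADIUS = 0.07  # m (assumed)


def wheel_speeds(vx: float, vy: float, omega: float) -> List[float]:
    """Inverse kinematics: body velocity (m/s, m/s, rad/s) to wheel angular speeds (rad/s).

    Each omniwheel drives tangentially to the base circle; its passive rollers
    let it slide along the axle direction, so only the tangential component of
    the contact-point velocity turns the wheel.
    """
    speeds = []
    for a in WHEEL_ANGLES:
        # Contact-point velocity is (vx - omega*R*sin a, vy + omega*R*cos a);
        # projecting it onto the tangent (-sin a, cos a) gives this expression.
        tangential = -math.sin(a) * vx + math.cos(a) * vy + BASE_RADIUS * omega
        speeds.append(tangential / WHEEL_RADIUS)
    return speeds


print(wheel_speeds(0.3, 0.0, 0.0))  # pure forward motion
print(wheel_speeds(0.0, 0.0, 1.0))  # pure rotation: all wheels turn equally
```

Because the three wheel directions are spread evenly, this mapping is invertible, which is why the base can translate sideways or turn in place without first reorienting.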

Pepper is not designed to manipulate objects as a core functionality. However, because it has two arms, each with a five-fingered hand, and an appropriate height, the robot can perform some basic object-handover and tabletop manipulation tasks using NAOqi and the ROS (thanks to its compatibility with the ROS and the established link between NAOqi and the ROS through the ROS bridge).

Safety: A Must-Have Feature at Different Levels

Safety is one of the essential features of the Pepper robot, especially because it is mobile, uses body language, and is supposed to operate in a human-centered environment and interact with people in close proximity. The machine is equipped with a fall manager, a push recovery (balance manager) module, and an inverted pendulum control to stabilize itself. Thanks to these, Pepper is able to manage its balance not only during its own dynamic motion (sent by the software to the motors) but also when external forces are applied to it. Because of its three-wheel locomotion system and its very low center of gravity, the machine is designed to fall less often than the bipedal NAO robot.
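The text mentions an inverted pendulum control without detailing it. As a rough illustration of the principle, the sketch below simulates the textbook planar case: a point mass on a massless rod whose base can be accelerated horizontally, stabilized by a proportional-derivative (PD) law that drives the base under the lean. The rod length `L` and the gains are hypothetical values, and this is not SoftBank Robotics's balance controller. Linearizing the dynamics gives θ̈ = ((g − k_p)/L)θ − (k_d/L)θ̇, so the upright pose is stable when k_p > g and k_d > 0.

```python
import math

G = 9.81  # m/s^2
L = 0.5   # m, hypothetical distance from the base to the upper-body center of mass


def simulate(theta0: float, kp: float, kd: float, t_end: float, dt: float = 1e-3) -> float:
    """Lean angle (rad) after t_end seconds for a base-balanced inverted pendulum.

    Dynamics: theta_dd = (G*sin(theta) - a*cos(theta)) / L, where a is the
    base's horizontal acceleration, treated as an ideal control input.
    PD law: a = kp*theta + kd*theta_d (accelerate the base under the lean).
    """
    theta, theta_d = theta0, 0.0
    for _ in range(int(round(t_end / dt))):
        a = kp * theta + kd * theta_d
        theta_dd = (G * math.sin(theta) - a * math.cos(theta)) / L
        theta_d += theta_dd * dt  # semi-implicit Euler: update velocity first
        theta += theta_d * dt
    return theta


controlled = simulate(0.1, kp=30.0, kd=8.0, t_end=5.0)   # settles back to upright
uncontrolled = simulate(0.1, kp=0.0, kd=0.0, t_end=1.0)  # falls over
print(f"{controlled:.2e} {uncontrolled:.2f}")
```

With these gains the linearized poles are both real and negative, so a 0.1-rad lean decays without oscillation; with no control the same lean grows until the mass swings down.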

To ensure safety even during Pepper’s shutdown process (started by pressing the chest button), a two-step procedure has been adopted. First, the robot goes into a relaxed and safe position and then turns its motors off. It also has a stop button at the back. In addition, as described earlier, the robot has no sharp edges. If someone bumps into the machine, it tries to maintain its balance, moving, if needed, to recover from a strong push. If the robot is pushed hard enough to fall over, it cuts off all of its motors as it falls softly to the floor. Most of the weight is in the base near the wheels, so the upper body is relatively light, which mitigates a fall.

The ISO 13482 norm, section 5.10 (https://www.iso.org/obp/ui/#iso:std:iso:13482:ed-1:v1:en), highlights the hazards due to robot motion and the corresponding safety requirements. The Pepper robot complies with some of these recommendations. For example, it stops before colliding with any obstacles that are detected more than 1.5 cm away, and it is equipped with touch reflex, reduced movement speed, blind zone analysis, and a module to create a local map for safe navigation. Furthermore, to avoid dangerous movements in blind zones, the arm speed is lessened when moving inside an unknown zone. The robot is designed to detect a human or obstacle using anticollision software. Also, the base is too low (2 cm) to roll over a human foot. Pepper has a travel speed limit of 2 km/h and an emergency speed limit (push recovery) of 3.6 km/h.
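The two speed limits quoted above can be expressed as a simple velocity governor. The sketch below converts them to meters per second, scales any planar velocity command down to the applicable limit while preserving its direction, and stops the base if an obstacle is reported inside a stopping distance. The 0.3-m stopping distance and the function name are hypothetical; this is not Pepper's anticollision module.

```python
import math
from typing import Optional, Tuple

KMH_TO_MS = 1000.0 / 3600.0
TRAVEL_LIMIT = 2.0 * KMH_TO_MS     # 2 km/h, about 0.56 m/s (from the text)
EMERGENCY_LIMIT = 3.6 * KMH_TO_MS  # 3.6 km/h, 1.0 m/s, push recovery only (from the text)
STOP_DISTANCE = 0.3                # m, hypothetical security distance to obstacles


def govern(vx: float, vy: float, obstacle_dist: Optional[float],
           recovering: bool = False) -> Tuple[float, float]:
    """Limit a planar velocity command (m/s).

    Returns zero velocity if an obstacle is detected inside STOP_DISTANCE;
    otherwise scales the command down to the applicable speed limit,
    preserving its direction.
    """
    if obstacle_dist is not None and obstacle_dist < STOP_DISTANCE:
        return 0.0, 0.0
    limit = EMERGENCY_LIMIT if recovering else TRAVEL_LIMIT
    speed = math.hypot(vx, vy)
    if speed <= limit:
        return vx, vy
    scale = limit / speed
    return vx * scale, vy * scale


print(govern(1.0, 0.0, None))  # clipped to the 2-km/h travel limit
print(govern(1.0, 0.0, 0.1))   # obstacle too close: stop
```

Scaling the whole vector, instead of clipping each axis separately, keeps the robot moving in the commanded direction when the limit is reached.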

The machine further complies with some of the inherently safe design recommendations of ISO 13482 by, for example, keeping the center of gravity of the personal-care robot low; ensuring that mechanical resonance effects cannot lead to instability; keeping the mass of the moving parts, especially the manipulator, as low as reasonably practicable; and using materials or structures to reduce impact forces. In addition, the safety policy also considers having a failsafe system if the robot should lose control, so it would not crash into a person or its surroundings. To achieve this, a hardware-level security feature, employing a brake system and elastomer in the hip, and a software-level precaution that stops the robot in a stable position in safe mode are implemented.

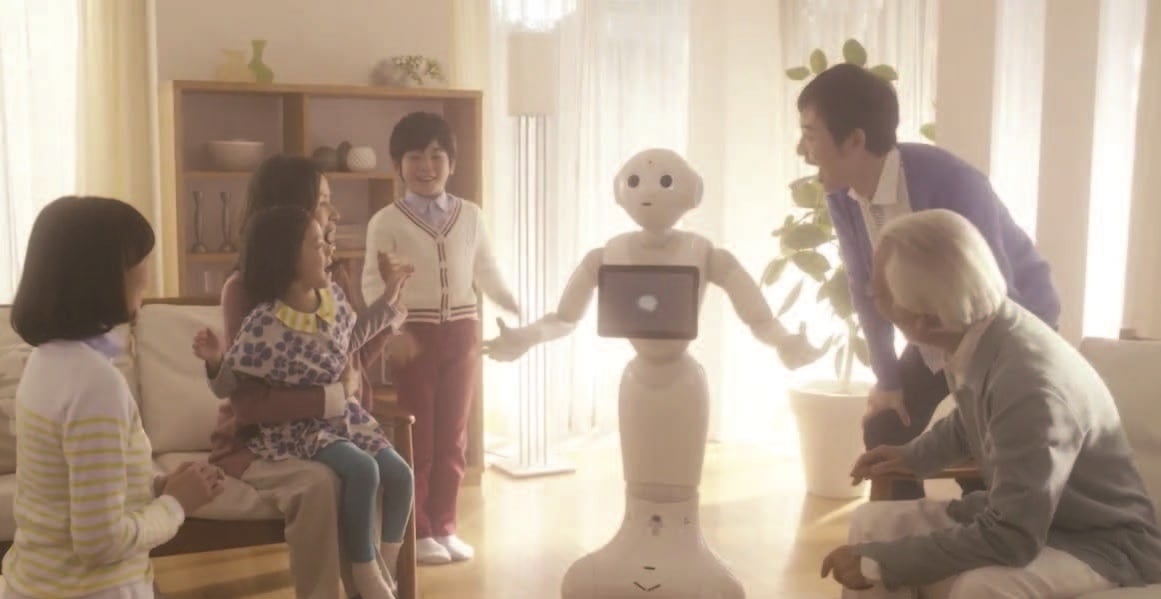

Application Potential and Acceptance

Figures 4 and 5 illustrate some of the use cases of the Pepper robot. The platform, by design, supports creating and running various apps, which can be developed for a large number of domains, e.g., health care, education, entertainment, and business. About 10,000 Peppers have already been sold, mainly in Japan. Some 3,000 of them are serving in various B2B applications: Pepper welcomes customers in SoftBank shops, sushi bars, clothing stores, and Nespresso boutiques. Roughly 7,000 of the robots are with consumers who want to experience life with a robot. In Europe, successful Pepper trials have been performed in French railway stations, at Carrefour supermarkets, at health-care and elder-care facilities, and on Costa Cruises ships. The robot is also available to and used by academics and business partners in Europe. In the United States, Pepper can be found, for instance, in San Francisco’s Westfield mall.


Figure 4. Use cases of the Pepper robot in (a) public and (b) home environments.

Because knowledge about robots will become increasingly necessary in school systems, approximately 2,000 robots have been provided to educational institutions in Japan to support the teaching of robot programming. One of SoftBank Robotics’s ambitions is to use Pepper-like social robots to address societal needs, and different use cases are being explored toward that end (also through various collaborations). A few of those are outlined in the following.


Figure 5. The application of the Pepper robot in (a) elder care and (b) child education.

User-Centeredness and Research Community Involvement

Considering end users’ and other stakeholders’ feedback is important for discovering the immediate scientific and R&D challenges to be solved for the greater societal impact of Pepper-like social robots and their accompanying technology. A huge database is evolving based on end user and business partner feedback from B2C and B2B deployments. In addition, expert feedback from other stakeholders, including academics and researchers in various collaborative projects, has been collected.

For example, in two European Union (EU) projects, the MultiModal Mall Entertainment Robot (http://mummerproject.eu) and the Culture Aware Robots and Environmental Sensor Systems for Elderly Support (http://caressesrobot.org/en/), the Pepper robot serves as the basic platform for the use cases, respectively, in a shopping mall (as part of the codesign process) and in senior care and assisted-living facilities. Two other EU Horizon 2020 projects, safe robot navigation in dense crowds (CROWDBOT) and advancing intuitive human-machine interaction with human-like social capabilities for education in schools (ANIMATAS) (http://www.animatas.eu), will further explore, respectively, the social navigation and guiding aspects of autonomous robots, including Pepper, and the educational potential of socially interactive agents-in-the-loop, also including the Pepper robot.

Through projects like these, the robot, its capabilities, and its use potential can be iteratively improved. Such involvement by the scientific community is also advancing the state of the art, e.g., navigation capability [14], the robot’s interaction ability in public places [15], cultural awareness for HRI [16], and narrative-memory-based human–robot companionship [19]. As mentioned earlier, Pepper has also become the standard platform for the SSPL of the RoboCup@Home (http://www.robocupathome.org) competition, in which teams from all around the world investigate and demonstrate a range of the robot’s capabilities and functionalities as well as its limitations and potential improvements.

As NAO and Pepper have the same development environment and similar upper-body kinematics, a program or module can be transferred from one robot to the other. Because NAO is already widely used in the scientific community, incorporating Pepper for similar research purposes will make an even larger B2A ecosystem possible.

All such collaborative efforts not only show the exciting application and software improvement potential of the Pepper robot but also help to advance it at the hardware level. For example, there have been iterative enhancements of the hip actuator, tablet performance, integrated microphones, and so forth. With all of these, in a closed loop of interaction among different stakeholders including academics, research organizations, industry, and end users, Pepper is evolving and finding widespread applications in everyday life.

Mass Adoption, Awareness, Ecosystem, and Policy Making

Affordability can be achieved through societal awareness and subsequent mass adoption of Pepper. This will require the creation of new and relevant applications for such sociable humanoid robots. Toward this end, SoftBank Robotics is facilitating a larger ecosystem of business partners, developers, and stakeholders through different programs. For example, the Partner Program (https://www.ald.softbankrobotics.com/en/partners/partners-program) aims to support companies in designing and selling business solutions using SoftBank Robotics machines. In addition, SoftBank Robotics hosts events like Pepper World and Pepper Partner, at which business partners present and exhibit various applications and use cases of the robot.

Two areas are equally crucial to pursue: 1) creating a general awareness and a bridge among industry, academics, research organizations, end users, and policy makers and 2) advancing the state of the art at the intersection of robotics, artificial intelligence (AI), the Internet of Things (IoT), and societal needs. To fully realize the positive potential of social robots, it is strategically important to bring these two areas into the mainstream agenda of policy makers and researchers. In this regard, some ongoing efforts in Europe are significant, e.g., a public–private partnership between euRobotics Association Internationale sans but Lucratif (https://www.eu-robotics.net/), an international nonprofit association for stakeholders in European robotics, and the European Commission, through the mechanism of SPARC (http://www.sparc-robotics.net/).

As one of the largest civilian-funded robotics innovation programs in the world, this partnership aims to shape the future of robotics in Europe by developing recommendations to the European Commission for the area of robotics under Horizon 2020. One way to connect different stakeholders and positively influence policy making is to take part in the partnership’s various topic groups (https://www.eu-robotics.net/eurobotics/topic-groups-/index.html), such as Socially Intelligent Robots and Societal Applications; Natural Interaction with Social Robots; AI and Cognition in Robotics; Standardization, Benchmarking, and Competitions; and Ethical-Legal-Socio-Economic Issues, to name a few.

In addition, the SoftBank Robotics developer program (https://developer.softbankrobotics.com) aims to connect with developers, support their work on the robot, and create a B2D ecosystem. Programming and app development events such as Hackathon 2018 (https://www.ald.softbankrobotics.com/en/hackathon2018), themed “Pepper for Well-Being,” help involve still other kinds of stakeholders, e.g., experts in user experience and marketing, and raise awareness among them. The participating teams explore and create inspiring and practicable applications using Pepper. Such efforts also seek to incorporate new technologies, such as the IoT and Android tablets, and to explore avenues for societal applications of the robot.

Future Prospects and Grand Challenges

The development of Pepper was a great learning experience. Ongoing iterative improvements of the robot are taking into account feedback from users and the scientific community and widening its scope to integrate with new technologies. Pepper is the first of its kind to create an avenue for a new generation of personal and service robots that are also mass-produced.

However, such general-purpose sociable robots still have a long way to go before they achieve success. Such machines must behave in a socially accepted and expected manner. Taken together, their need for robust perception in real environments, the constraints of perceiving and acting in real time with available resources, and the necessity of dynamically interacting with different kinds of real users pose key challenges for developing a coherent scientific and functional framework, which still requires deep investigation from various angles. In this regard, some directions to explore include connectivity, learning, and cloud-based collective intelligence for developing the robots’ social intelligence and proactive behavior [11], [17], as well as understanding human–robot engagement [22].

Another need is to provide users with natural and intuitive ways to teach the robot new behaviors, making it more individually useful, personalizable, and adaptable to different domains [18]. Future social, legal, and ethical concerns might involve conflicts among privacy, an owner’s commands, and social accountability and ethics [12], which call for the involvement of a larger multidisciplinary community. With everyone’s combined efforts, we hope that a success story awaits social robots in the next big technological revolution.

Acknowledgments

We would like to acknowledge Jérôme Monceaux, Vincent Clerc, Alec Lafourcade, Ludovic Houchu, Julien Serre, Hayashi Kaname, Akiho Shibata, Hideki Okada, and all of the staff members of SoftBank Robotics for their great contributions to the Pepper vision. This work was partially supported by the Romeo2 project (http://www.projetromeo.com/) and BPI-France in the framework of the Structuring Projects of Competitiveness Clusters; by the European Union’s Horizon 2020 project Culture Aware Robots and Environmental Sensor Systems for Elderly Support (http://www.caressesrobot.org) under grant 737858; by the Ministry of Internal Affairs and Communication of Japan; and by the European Union’s Horizon 2020 research and innovation program project MultiModal Mall Entertainment Robot (http://www.mummer-project.eu) under grant 688147.

References

- D. Perzanowski, A. C. Schultz, W. Adams, E. Marsh, and M. Bugajska, “Building a multimodal human-robot interface,” IEEE Intell. Syst., vol. 16, no. 1, pp. 16–21, 2001.

- M. A. Goodrich and A. C. Schultz, “Human–robot interaction: A survey,” Found. Trends Human–Comput. Interaction, vol. 1, no. 3, pp. 203–275, 2007.

- D. Gouaillier, V. Hugel, P. Blazevic, C. Kilner, J. Monceaux, P. Lafourcade, B. Marnier, J. Serre, and B. Maisonnier, “Mechatronic design of NAO humanoid,” in Proc. IEEE Int. Conf. Robotics and Automation (ICRA), 2009, pp. 769–774.

- E. Pot, J. Monceaux, R. Gelin, and B. Maisonnier, “Choregraphe: A graphical tool for humanoid robot programming,” in Proc. 18th IEEE Int. Symp. Robot and Human Interactive Communication (RO-MAN), 2009, pp. 46–51.

- C. D. Kidd and C. Breazeal, “Effect of a robot on user perceptions,” in Proc. 2004 IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2004, vol. 4, pp. 3559–3564.

- M. L. Walters, D. S. Syrdal, K. Dautenhahn, R. Te Boekhorst, and K. L. Koay, “Avoiding the uncanny valley: Robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion,” Auton. Robots, vol. 24, no. 2, pp. 159–178, 2008.

- C.-W. Chang, J.-H. Lee, P.-Y. Chao, C.-Y. Wang, and G.-D. Chen, “Exploring the possibility of using humanoid robots as instructional tools for teaching a second language in primary school,” Educ. Technol. Soc., vol. 13, no. 2, pp. 13–24, 2010.

- M. Mori, “The uncanny valley,” K. F. MacDorman and N. Kageki, Trans., IEEE Robot. Autom. Mag., vol. 19, no. 2, pp. 98–100, 2012.

- M. Siegel, C. Breazeal, and M. I. Norton, “Persuasive robotics: The influence of robot gender on human behavior,” in Proc. IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), 2009, pp. 2563–2568.

- B. Tay, Y. Jung, and T. Park, “When stereotypes meet robots: The double-edge sword of robot gender and personality in human–robot interaction,” Comput. Human Behavior, vol. 38, pp. 75–84, Sept. 2014.

- A. K. Pandey, R. Gelin, M. Ruocco, M. Monforte, and B. Siciliano, “When a social robot might learn to support potentially immoral behaviors in the name of privacy: The dilemma of privacy vs. ethics for a socially intelligent robot,” in Proc. 2017 Conf. Human-Robot Interaction (Workshop on Privacy-Sensitive Robotics), pp. 1–4.

- R. Gelin, “NAO,” in Humanoid Robotics: A Reference, A. Goswami and P. Vadakkepat, Eds. Berlin: Springer-Verlag, 2017.

- J. Lafaye, D. Gouaillier, and P. B. Wieber, “Linear model predictive control of the locomotion of Pepper, a humanoid robot with omnidirectional wheels,” in Proc. 14th IEEE-RAS Int. Conf. Humanoid Robots (Humanoids), 2014, pp. 336–341.

- M. E. Foster, R. Alami, O. Gestranius, O. Lemon, M. Niemelä, J.-M. Odobez, and A. K. Pandey, “The MuMMER Project: Engaging human-robot interaction in real-world public spaces,” in Proc. 8th Int. Conf. Social Robotics (ICSR), 2016, pp. 753–763.

- B. Bruno, N. Y. Chong, H. Kamide, S. Kanoria, J. Lee, Y. Lim, A. K. Pandey, C. Papadopoulos, I. Papadopoulos, F. Pecora, and A. Saffiotti, “Paving the way for culturally competent robots: A position paper,” in Proc. 26th IEEE Int. Symp. Robot and Human Interactive Communication (RO-MAN), 2017, pp. 553–560.

- A. K. Pandey, “Socially intelligent robots: The next generation of consumer robots and the challenges,” in Advances in Intelligent Systems and Computing, vol. 665, G. Stojanov and A. Kulakov, Eds. Cham, Switzerland: Springer-Verlag, 2016, pp. 41–46.

- P. F. Dominey, V. Paléologue, A. K. Pandey, and J. Ventre-Dominey, “Improving quality of life with a narrative companion,” in Proc. 2017 26th IEEE Int. Symp. Robot and Human Interactive Communication (RO-MAN), 2017, pp. 127–134.

- K. M. Lee, Y. Jung, J. Kim, and S. R. Kim, “Are physically embodied social agents better than disembodied social agents? The effects of physical embodiment, tactile interaction, and people’s loneliness in human–robot interaction,” Int. J. Human-Comput. Stud., vol. 64, no. 10, pp. 962–973, 2006.

- D. Leyzberg, S. Spaulding, M. Toneva, and B. Scassellati, “The physical presence of a robot tutor increases cognitive learning gains,” in Proc. 34th Annu. Meeting Cognitive Science Society (CogSci), 2012, pp. 1882–1887.

- A. Ben-Youssef, C. Clavel, S. Essid, M. Bilac, M. Chamoux, and A. Lim, “UE-HRI: A new dataset for the study of user engagement in spontaneous human-robot interactions,” in Proc. 19th ACM Int. Conf. Multimodal Interaction (ICMI), 2017, pp. 464–472.

Amit Kumar Pandey, Innovation Department, SoftBank Robotics Europe, Paris, France. E-mail: akpandey@softbankrobotics.com.

Rodolphe Gelin, Innovation Department, SoftBank Robotics Europe, Paris, France. E-mail: rgelin@softbankrobotics.com.
